Text-to-Speech with Tacotron2

Author: Yao-Yuan Yang, Moto Hira

Overview

This tutorial shows how to build a text-to-speech (TTS) pipeline using the pretrained Tacotron2 model in torchaudio.

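The text-to-speech pipeline works as follows:

- Text preprocessing

  First, the input text is encoded into a list of symbols. In this tutorial, we use English characters and phonemes as the symbols.

- Spectrogram generation

  A spectrogram is generated from the encoded text. We use the Tacotron2 model for this step.

- Time-domain conversion

  The last step is converting the spectrogram into a waveform. The component that generates speech from a spectrogram is called a vocoder. In this tutorial, three different vocoders are used: WaveRNN, GriffinLim, and Nvidia's WaveGlow.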

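The following figure illustrates the whole process.

All the related components are bundled in torchaudio.pipelines.Tacotron2TTSBundle, but this tutorial also covers what happens under the hood.

Preparation

First, we install the necessary dependencies. In addition to torchaudio, DeepPhonemizer is required to perform phoneme-based encoding.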

%%bash

pip3 install deep_phonemizer

import torch

import torchaudio

torch.random.manual_seed(0)

device = "cuda" if torch.cuda.is_available() else "cpu"

print(torch.__version__)

print(torchaudio.__version__)

print(device)

2.6.0

2.6.0

cuda

import IPython

import matplotlib.pyplot as plt

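Text Processing

Character-based encoding

In this section, we go through how character-based encoding works.

Since the pretrained Tacotron2 model expects a specific symbol table, the same functionality is available in torchaudio. However, we first implement the encoding by hand to aid understanding.

First, we define the set of symbols '_-!\'(),.:;? abcdefghijklmnopqrstuvwxyz'. Then, we map each character of the input text to the index of the corresponding symbol in the table. Characters that are not in the table are ignored.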

symbols = "_-!'(),.:;? abcdefghijklmnopqrstuvwxyz"

look_up = {s: i for i, s in enumerate(symbols)}

symbols = set(symbols)

def text_to_sequence(text):
    text = text.lower()
    return [look_up[s] for s in text if s in symbols]

text = "Hello world! Text to speech!"

print(text_to_sequence(text))

[19, 16, 23, 23, 26, 11, 34, 26, 29, 23, 15, 2, 11, 31, 16, 35, 31, 11, 31, 26, 11, 30, 27, 16, 16, 14, 19, 2]
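The mapping can also be inverted, which is a quick way to check what survives the encoding. The following is a minimal sketch that rebuilds the same symbol table; `sequence_to_text` is a hypothetical helper name introduced here for illustration, not part of torchaudio.

```python
symbols = "_-!'(),.:;? abcdefghijklmnopqrstuvwxyz"
look_up = {s: i for i, s in enumerate(symbols)}


def text_to_sequence(text):
    # Lowercase, then keep only characters that exist in the symbol table
    return [look_up[s] for s in text.lower() if s in look_up]


def sequence_to_text(sequence):
    # Hypothetical inverse helper: map each index back to its symbol
    return "".join(symbols[i] for i in sequence)


encoded = text_to_sequence("Hello world! Text to speech!")
decoded = sequence_to_text(encoded)
print(decoded)  # hello world! text to speech!
```

The round trip returns the lowercased text, which shows that case information is lost and that any character outside the table would be dropped.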

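As mentioned above, the symbol table and indices must match what the pretrained Tacotron2 model expects. torchaudio provides the same transform along with the pretrained model. You can instantiate and use the transform as follows.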

processor = torchaudio.pipelines.TACOTRON2_WAVERNN_CHAR_LJSPEECH.get_text_processor()

text = "Hello world! Text to speech!"

processed, lengths = processor(text)

print(processed)

print(lengths)

tensor([[19, 16, 23, 23, 26, 11, 34, 26, 29, 23, 15,  2, 11, 31, 16, 35, 31, 11,
         31, 26, 11, 30, 27, 16, 16, 14, 19,  2]])

tensor([28], dtype=torch.int32)

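Note: the output of our manual encoding matches the output of torchaudio's text_processor, which means we correctly re-implemented what the library does internally. The processor accepts either a single text or a list of texts. When a list of texts is supplied, the returned lengths variable gives the valid length of each processed token sequence in the output batch.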
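To make the role of `lengths` concrete, here is a minimal pure-Python sketch of batching two texts of different length. It assumes that shorter sequences are right-padded with index 0; `encode_batch` is a hypothetical helper, and the real processor returns tensors rather than lists.

```python
symbols = "_-!'(),.:;? abcdefghijklmnopqrstuvwxyz"
look_up = {s: i for i, s in enumerate(symbols)}


def encode_batch(texts, pad_index=0):
    # Encode each text, then right-pad to the longest sequence in the batch
    sequences = [[look_up[s] for s in t.lower() if s in look_up] for t in texts]
    lengths = [len(seq) for seq in sequences]
    max_len = max(lengths)
    padded = [seq + [pad_index] * (max_len - len(seq)) for seq in sequences]
    return padded, lengths


padded, lengths = encode_batch(["Hello world!", "Hi!"])
print(lengths)  # [12, 3]
print(padded[1])  # [19, 20, 2, 0, 0, 0, 0, 0, 0, 0, 0, 0]
```

Only the first `lengths[i]` entries of row `i` are real tokens; the rest is padding that downstream code should ignore.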

The intermediate representation can be retrieved as follows:

print([processor.tokens[i] for i in processed[0, : lengths[0]]])

['h', 'e', 'l', 'l', 'o', ' ', 'w', 'o', 'r', 'l', 'd', '!', ' ', 't', 'e', 'x', 't', ' ', 't', 'o', ' ', 's', 'p', 'e', 'e', 'c', 'h', '!']

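Phoneme-based encoding

Phoneme-based encoding is similar to character-based encoding, but it uses a symbol table based on phonemes and a G2P (grapheme-to-phoneme) model.

The details of the G2P model are out of the scope of this tutorial; we will only look at what the conversion produces.

As with character-based encoding, the encoding process must match what the pretrained Tacotron2 model was trained on. torchaudio provides an interface for creating this process.

The following code shows how to create and use the process. Behind the scenes, a G2P model is created using the DeepPhonemizer package, and the pretrained weights published by the author of DeepPhonemizer are fetched.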

bundle = torchaudio.pipelines.TACOTRON2_WAVERNN_PHONE_LJSPEECH

processor = bundle.get_text_processor()

text = "Hello world! Text to speech!"

with torch.inference_mode():
    processed, lengths = processor(text)

print(processed)

print(lengths)

tensor([[54, 20, 65, 69, 11, 92, 44, 65, 38,  2, 11, 81, 40, 64, 79, 81, 11, 81,
         20, 11, 79, 77, 59, 37,  2]])

tensor([25], dtype=torch.int32)

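Note that the encoded values are different from those in the character-based encoding example.

The intermediate representation looks like the following.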

print([processor.tokens[i] for i in processed[0, : lengths[0]]])

['HH', 'AH', 'L', 'OW', ' ', 'W', 'ER', 'L', 'D', '!', ' ', 'T', 'EH', 'K', 'S', 'T', ' ', 'T', 'AH', ' ', 'S', 'P', 'IY', 'CH', '!']
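The two stages of phoneme-based encoding (words to phonemes, then phonemes to indices) can be sketched without a G2P model. In the toy example below, a hard-coded pronunciation dictionary stands in for the model, and the partial symbol table contains only the token/index pairs visible in the output above. `TOY_G2P`, `TOKEN_TO_INDEX`, and `toy_phonemize` are hypothetical names; a real G2P model predicts pronunciations for arbitrary words rather than looking them up.

```python
import re

# Toy pronunciation dictionary (hypothetical stand-in for a G2P model).
# The phonemes are copied from the tutorial output for this sentence.
TOY_G2P = {
    "hello": ["HH", "AH", "L", "OW"],
    "world": ["W", "ER", "L", "D"],
    "text": ["T", "EH", "K", "S", "T"],
    "to": ["T", "AH"],
    "speech": ["S", "P", "IY", "CH"],
}

# Partial symbol table: only the indices visible in the tutorial output.
TOKEN_TO_INDEX = {
    "!": 2, " ": 11, "AH": 20, "CH": 37, "D": 38, "EH": 40, "ER": 44,
    "HH": 54, "IY": 59, "K": 64, "L": 65, "OW": 69, "P": 77, "S": 79,
    "T": 81, "W": 92,
}


def toy_phonemize(text):
    tokens = []
    # Split into words and single non-letter characters (spaces, punctuation)
    for piece in re.findall(r"[a-z]+|[^a-z]", text.lower()):
        if piece in TOY_G2P:
            tokens.extend(TOY_G2P[piece])
        elif piece in TOKEN_TO_INDEX:
            tokens.append(piece)
        # Anything else is unknown to this toy example and is dropped
    return tokens


tokens = toy_phonemize("Hello world! Text to speech!")
indices = [TOKEN_TO_INDEX[t] for t in tokens]
print(len(indices))  # 25
```

The 25 indices reproduce the tensor printed above, compared with 28 for the character-based encoding of the same sentence.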

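Spectrogram Generation

Tacotron2 is the model we use to generate a spectrogram from the encoded text. For details of the model, please refer to the paper.

Instantiating a Tacotron2 model with pretrained weights is easy; note, however, that the input to a Tacotron2 model must be processed by the matching text processor.

torchaudio.pipelines.Tacotron2TTSBundle bundles the matching models and processors together so that it is easy to create the pipeline.

For the available bundles and their usage, please refer to Tacotron2TTSBundle.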

bundle = torchaudio.pipelines.TACOTRON2_WAVERNN_PHONE_LJSPEECH

processor = bundle.get_text_processor()

tacotron2 = bundle.get_tacotron2().to(device)

text = "Hello world! Text to speech!"

with torch.inference_mode():
    processed, lengths = processor(text)
    processed = processed.to(device)
    lengths = lengths.to(device)
    spec, _, _ = tacotron2.infer(processed, lengths)

_ = plt.imshow(spec[0].cpu().detach(), origin="lower", aspect="auto")

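Note that the Tacotron2.infer method performs multinomial sampling, so generating the spectrogram is a random process: repeated calls on the same input produce spectrograms of different lengths.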
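As a generic illustration of why sampling makes the output vary, and how a fixed seed makes it repeatable, here is a small standard-library sketch. It is not Tacotron2's implementation; `sample_index` and the probabilities are made up for the example.

```python
import random


def sample_index(probs, rng):
    # Draw one index according to the given probabilities (multinomial sampling)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


probs = [0.1, 0.6, 0.3]

# Two generators with the same seed produce the same sequence of draws
rng_a = random.Random(0)
rng_b = random.Random(0)
draws_a = [sample_index(probs, rng_a) for _ in range(10)]
draws_b = [sample_index(probs, rng_b) for _ in range(10)]
print(draws_a == draws_b)  # True
```

In the same spirit, re-seeding PyTorch's generator with torch.random.manual_seed before each call should, in principle, make successive spectrograms repeatable, although this is not verified here.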

def plot():
    fig, ax = plt.subplots(3, 1)
    for i in range(3):
        with torch.inference_mode():
            spec, spec_lengths, _ = tacotron2.infer(processed, lengths)
        print(spec[0].shape)
        ax[i].imshow(spec[0].cpu().detach(), origin="lower", aspect="auto")

plot()

torch.Size([80, 190])

torch.Size([80, 184])

torch.Size([80, 185])

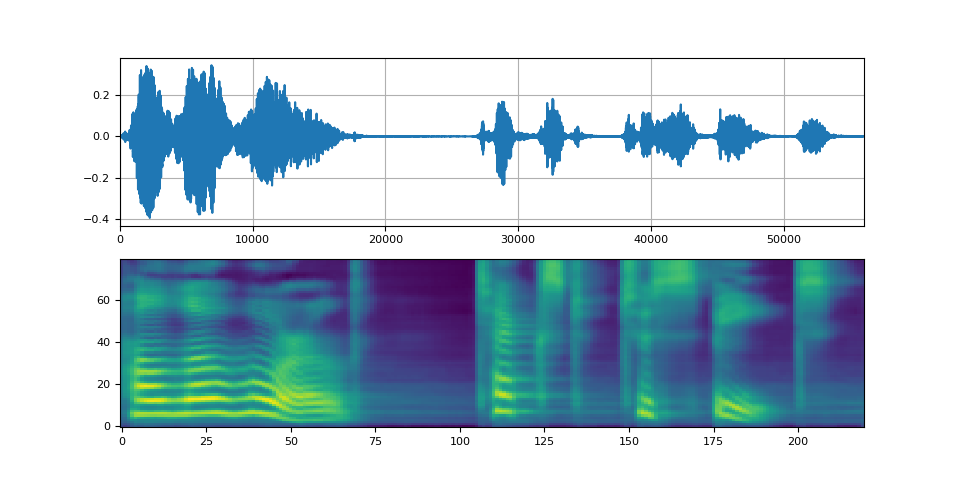
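The second dimension of each printed shape is the number of spectrogram frames, so the differing shapes translate directly into different audio durations. A rough conversion is sketched below. The hop length of 256 samples and the 22050 Hz sample rate are illustrative assumptions; the real hop length depends on the bundle and vocoder in use, and `frames_to_seconds` is a hypothetical helper.

```python
def frames_to_seconds(num_frames, hop_length=256, sample_rate=22050):
    # Each frame advances the signal by hop_length samples
    return num_frames * hop_length / sample_rate


# Frame counts taken from the shapes printed above
for frames in (190, 184, 185):
    print(frames, round(frames_to_seconds(frames), 2))
```

Under these assumed values, a difference of a few frames corresponds to a few hundredths of a second of audio.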

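Waveform Generation

Once the spectrogram is generated, the last step is to recover the waveform from it using a vocoder.

torchaudio provides vocoders based on GriffinLim and WaveRNN.

WaveRNN Vocoder

Continuing from the previous section, we can instantiate the matching WaveRNN model from the same bundle.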

bundle = torchaudio.pipelines.TACOTRON2_WAVERNN_PHONE_LJSPEECH

processor = bundle.get_text_processor()

tacotron2 = bundle.get_tacotron2().to(device)

vocoder = bundle.get_vocoder().to(device)

text = "Hello world! Text to speech!"

with torch.inference_mode():
    processed, lengths = processor(text)
    processed = processed.to(device)
    lengths = lengths.to(device)
    spec, spec_lengths, _ = tacotron2.infer(processed, lengths)
    waveforms, lengths = vocoder(spec, spec_lengths)

def plot(waveforms, spec, sample_rate):
    waveforms = waveforms.cpu().detach()

    fig, [ax1, ax2] = plt.subplots(2, 1)
    ax1.plot(waveforms[0])
    ax1.set_xlim(0, waveforms.size(-1))
    ax1.grid(True)
    ax2.imshow(spec[0].cpu().detach(), origin="lower", aspect="auto")
    return IPython.display.Audio(waveforms[0:1], rate=sample_rate)

plot(waveforms, spec, vocoder.sample_rate)

Griffin-Lim Vocoder

Using the Griffin-Lim vocoder works the same way as WaveRNN. You can instantiate the vocoder object with the get_vocoder() method and pass it the spectrogram.

bundle = torchaudio.pipelines.TACOTRON2_GRIFFINLIM_PHONE_LJSPEECH

processor = bundle.get_text_processor()

tacotron2 = bundle.get_tacotron2().to(device)

vocoder = bundle.get_vocoder().to(device)

with torch.inference_mode():
    processed, lengths = processor(text)
    processed = processed.to(device)
    lengths = lengths.to(device)
    spec, spec_lengths, _ = tacotron2.infer(processed, lengths)
    waveforms, lengths = vocoder(spec, spec_lengths)

plot(waveforms, spec, vocoder.sample_rate)

Waveglow Vocoder

Waveglow is a vocoder published by Nvidia. The pretrained weights are published on Torch Hub. You can instantiate the model using the torch.hub module.

# Workaround to load model mapped on GPU

# https://stackoverflow.com/a/61840832

waveglow = torch.hub.load(
    "NVIDIA/DeepLearningExamples:torchhub",
    "nvidia_waveglow",
    model_math="fp32",
    pretrained=False,
)
checkpoint = torch.hub.load_state_dict_from_url(
    "https://api.ngc.nvidia.com/v2/models/nvidia/waveglowpyt_fp32/versions/1/files/nvidia_waveglowpyt_fp32_20190306.pth",  # noqa: E501
    progress=False,
    map_location=device,
)

state_dict = {key.replace("module.", ""): value for key, value in checkpoint["state_dict"].items()}

waveglow.load_state_dict(state_dict)

waveglow = waveglow.remove_weightnorm(waveglow)

waveglow = waveglow.to(device)

waveglow.eval()

with torch.no_grad():
    waveforms = waveglow.infer(spec)
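The dictionary comprehension in the code above strips `module.` from every parameter name; checkpoints saved from a model wrapped in a data-parallel container typically carry that prefix. The sketch below shows the same idea on a plain dictionary standing in for checkpoint["state_dict"]. The key names are made up for illustration, and `strip_prefix` is a hypothetical helper.

```python
# Plain dict standing in for checkpoint["state_dict"]; keys are illustrative
checkpoint_state = {
    "module.upsample.weight": 1,
    "module.WN.0.start.bias": 2,
    "submodule.weight": 3,
}


def strip_prefix(state_dict, prefix="module."):
    # Remove the prefix only where it appears at the start of the key
    return {
        (key[len(prefix):] if key.startswith(prefix) else key): value
        for key, value in state_dict.items()
    }


cleaned = strip_prefix(checkpoint_state)
print(sorted(cleaned))
```

Unlike `str.replace`, this variant only touches a leading prefix, so a key such as `submodule.weight` is left alone.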

plot(waveforms, spec, 22050)